Using Elasticsearch as Log Storage in Laravel

Introduction

In day-to-day development we often need to look through logs while debugging. The traditional approach is to SSH into the production server and search the logs with commands such as cat, tail and grep. This makes it hard to compute statistics on the logs or analyze them, so much of their value goes untapped.

This article focuses on a clean way to make Laravel write logs directly to Elasticsearch. Alternatively, you can use Logstash to collect Laravel's local log files.

For environment setup, you can refer to the blog posts “Elastic Stack - Elasticsearch” and “Elastic Stack - Kibana”.

Required Dependencies

- elasticsearch/elasticsearch

- betterde/logger (if needed)

Principle Analysis

Monolog

Laravel uses monolog/monolog as its underlying logging library. Monolog ships with many handlers, including ElasticsearchHandler, which we will use later.

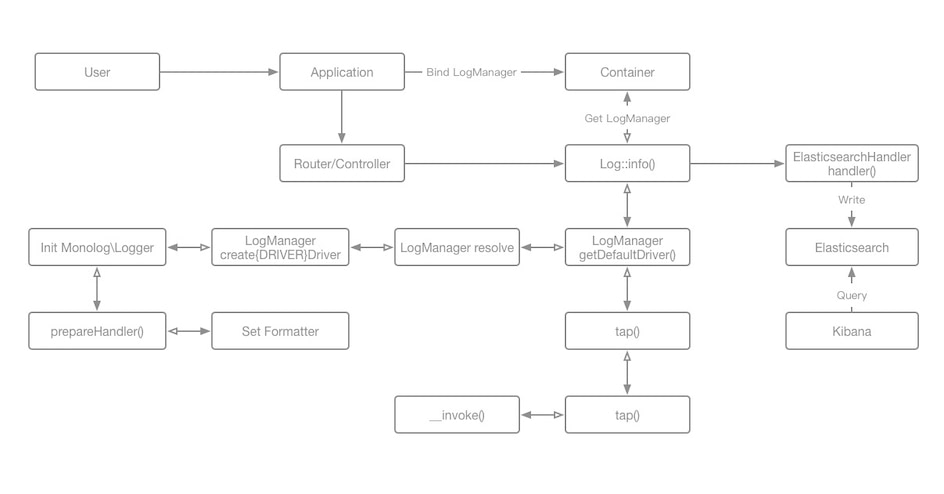

Lifecycle

- In the constructor of `Illuminate\Foundation\Application`, the base service providers are registered, including `Illuminate\Log\LogServiceProvider`.
- `Illuminate\Log\LogServiceProvider` registers `Illuminate\Log\LogManager` in the container, but no Logger exists yet.
- When we call `Illuminate\Support\Facades\Log::info()`, LogManager runs a series of internal methods that create the Logger and the Handlers it needs, based on the configuration file.
- Finally, it calls the Logger's `info()`, `error()`, `debug()` or other method to write the log entry.
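The lazy behaviour described in these steps (LogManager is registered up front, but a Logger is only built on the first log call) can be illustrated with a small plain-PHP sketch. `SketchLogManager` and `SketchLogger` are hypothetical names, and the in-memory `$lines` array stands in for real Monolog handlers. The part it mimics is that Laravel's LogManager caches each resolved channel and forwards calls such as `info()` to the default channel through `__call`.

```php
<?php
// Hypothetical sketch of the lazy-resolution idea behind Illuminate\Log\LogManager.
// Names are illustrative only; the real LogManager does much more.

final class SketchLogger
{
    /** @var string[] collected lines, standing in for real handlers */
    public $lines = [];
    private $name;

    public function __construct(string $name)
    {
        $this->name = $name;
    }

    public function info(string $message): void
    {
        $this->lines[] = "{$this->name}.INFO: {$message}";
    }
}

final class SketchLogManager
{
    private $config;
    /** @var array<string, SketchLogger> channels resolved so far */
    private $channels = [];
    /** @var int how many times a channel was actually built */
    public $resolved = 0;

    public function __construct(array $config)
    {
        // Only the configuration is stored; no logger is created yet.
        $this->config = $config;
    }

    public function channel(?string $name = null): SketchLogger
    {
        $name = $name ?? $this->config['default'];
        // Build the logger on first use, then reuse the cached instance.
        if (!isset($this->channels[$name])) {
            $this->channels[$name] = $this->resolve($name);
        }
        return $this->channels[$name];
    }

    private function resolve(string $name): SketchLogger
    {
        if (!isset($this->config['channels'][$name])) {
            throw new InvalidArgumentException("Log [{$name}] is not defined.");
        }
        $this->resolved++;
        return new SketchLogger($this->config['channels'][$name]['name'] ?? $name);
    }

    // Forward calls such as info() to the default channel.
    public function __call(string $method, array $args)
    {
        return $this->channel()->{$method}(...$args);
    }
}

$log = new SketchLogManager([
    'default' => 'elastic',
    'channels' => ['elastic' => ['name' => 'Develop']],
]);

$log->info('first');  // builds the "elastic" logger
$log->info('second'); // reuses the cached logger
```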

Simple Adaptation

You can write logs directly to Elasticsearch just by adding a channel to config/logging.php and selecting it in the .env file:

```php
// config/logging.php, inside the 'channels' array
'elastic' => [
    'driver' => 'monolog',
    'level' => 'debug',
    'name' => 'Develop',
    'tap' => [],
    'handler' => \Monolog\Handler\ElasticsearchHandler::class,
    'formatter' => \Monolog\Formatter\ElasticsearchFormatter::class,
    'formatter_with' => [
        'index' => 'monolog',
        'type' => '_doc',
    ],
    'handler_with' => [
        // Building an object inside the config file will likely break `php artisan config:cache`
        'client' => \Elasticsearch\ClientBuilder::create()->setHosts(['http://localhost:9200'])->build(),
    ],
],
```

```env
LOG_CHANNEL=elastic
```
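With this channel, each `Log::info()` call passes through ElasticsearchFormatter and then ElasticsearchHandler. As far as I can tell from Monolog 2.x, the formatter normalizes the record, formats the date as ISO 8601 with microseconds and tags the record with `_index` and `_type`; the handler then builds a bulk API body of alternating action and document entries. The hypothetical helpers below (`sketch_format`, `sketch_bulk_body`) only reproduce that data shape in plain PHP, without the real classes or an Elasticsearch client.

```php
<?php
// Hypothetical helpers mimicking the *shape* of what Monolog 2's
// ElasticsearchFormatter and ElasticsearchHandler produce. Not the real classes.

// Formatter step: ISO 8601 timestamp with microseconds, plus target index/type.
function sketch_format(array $record, string $index, string $type): array
{
    $record['datetime'] = $record['datetime']->format('Y-m-d\TH:i:s.uP');
    $record['_index'] = $index;
    $record['_type'] = $type;
    return $record;
}

// Handler step: alternate an "index" action entry with the document itself.
function sketch_bulk_body(array $documents): array
{
    $body = [];
    foreach ($documents as $doc) {
        $body[] = ['index' => ['_index' => $doc['_index'], '_type' => $doc['_type']]];
        unset($doc['_index'], $doc['_type']); // metadata lives in the action entry
        $body[] = $doc;
    }
    return ['body' => $body];
}

$record = [
    'message'    => 'User logged in',
    'context'    => ['user_id' => 42],
    'level'      => 200,
    'level_name' => 'INFO',
    'channel'    => 'Develop',
    'datetime'   => new DateTimeImmutable('2019-11-20 08:00:00.123456', new DateTimeZone('UTC')),
    'extra'      => [],
];

// The real handler would pass an array like this to the client's bulk() method.
$params = sketch_bulk_body([sketch_format($record, 'monolog', '_doc')]);
```

Because the handler writes each record as it is logged, a request that logs ten lines results in ten separate bulk requests of this shape.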

Package

You'll notice that Laravel writes each log entry synchronously, one at a time. With a remote store such as Elasticsearch, every entry becomes a separate network request, which hurts performance. A better approach is to keep all log records in memory for the whole request lifecycle and write them to storage in one batch, synchronously or asynchronously, just before the request ends.

To do this, I spent a weekend writing an extension package called betterde/logger. If you run into any problems using it, feel free to open an issue or submit a PR on GitHub.
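The buffering idea can be sketched in plain PHP: records are kept in memory while the request runs, and a single `flush()` at the end sends them all in one batch. `BufferedLogWriter` and the `$send` callable are hypothetical and do not reflect betterde/logger's internals; in Laravel, the flush would typically be triggered from a terminable middleware's `terminate()` method.

```php
<?php
// Hypothetical sketch of "buffer during the request, write once at the end".
// The $send callable stands in for one Elasticsearch bulk request (or a queued job).

final class BufferedLogWriter
{
    /** @var array[] records collected during the current request */
    private $buffer = [];
    /** @var callable receives all buffered records in one call */
    private $send;

    public function __construct(callable $send)
    {
        $this->send = $send;
    }

    // Called for every log line: in-memory only, no network I/O.
    public function write(array $record): void
    {
        $this->buffer[] = $record;
    }

    // Called once before the request ends; sends everything as a single batch.
    public function flush(): void
    {
        if ($this->buffer === []) {
            return;
        }
        ($this->send)($this->buffer);
        $this->buffer = [];
    }
}

$requests = [];
$writer = new BufferedLogWriter(function (array $records) use (&$requests): void {
    // Record how many entries each simulated bulk request carried.
    $requests[] = count($records);
});

$writer->write(['message' => 'start']);
$writer->write(['message' => 'query executed']);
$writer->write(['message' => 'done']);
$writer->flush();
$writer->flush(); // buffer is empty, so no second request is sent
```

Monolog itself also ships `Monolog\Handler\BufferHandler`, which follows a similar pattern: it wraps another handler and, when closed, passes the collected records to that handler's `handleBatch()`, which ElasticsearchHandler sends as a single bulk request.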

Installation

```shell
$ composer require betterde/logger
$ php artisan vendor:publish --tag=betterde.logger
```

Configuration

```php
<?php

return [
    /*
     * Whether to enable batch writing (requires setting up the middleware)
     */
    'batch' => false,

    /*
     * Whether to write logs through the queue
     */
    'queue' => false,

    /*
     * Log level; see the level constants in Monolog\Logger (200 = INFO)
     */
    'level' => 200,

    /*
     * Whether records bubble up to the next handler in the stack
     */
    'bubble' => false,

    /*
     * Elasticsearch connection
     */
    'elasticsearch' => [
        'hosts' => [
            [
                /*
                 * host is required
                 */
                'host' => env('ELASTICSEARCH_HOST', 'localhost'),
                'port' => env('ELASTICSEARCH_PORT', 9200),
                'scheme' => env('ELASTICSEARCH_SCHEME', 'http'),
                'user' => env('ELASTICSEARCH_USER', null),
                'pass' => env('ELASTICSEARCH_PASS', null),
            ],
        ],
        'retries' => 2,

        /*
         * Certificate path
         */
        'cert' => '',
    ],

    /*
     * Handler settings
     */
    'options' => [
        'index' => 'monolog',    // Elasticsearch index name
        'type' => '_doc',        // Elasticsearch document type
        'ignore_error' => false, // Suppress Elasticsearch exceptions
    ],

    /*
     * Whether to record stack traces when logging exceptions
     */
    'exception' => [
        'trace' => false,
    ],

    /*
     * Extra attributes, used to tell apart multiple projects that share the
     * same Elasticsearch index. All keys in the extra array can be customized;
     * these are just examples.
     */
    'extra' => [
        'host' => 'example.com',
        'php' => '7.3.5',
        'laravel' => '6.5.2',
    ],
];
```
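To make the `level`, `bubble` and `extra` options more concrete, here is a hypothetical Monolog-style sketch (`SketchHandler`, `with_extra` and `log_record` are illustrative names, not the package's API). It assumes that `level` is a minimum threshold using the numeric constants from Monolog\Logger, that `bubble => false` stops a handled record from reaching the next handler, and that `extra` is merged into every record's `extra` field. That is how Monolog handlers and processors behave, but betterde/logger may wire them differently.

```php
<?php
// Hypothetical sketch of how level, bubble and extra typically interact
// in a Monolog-style handler stack. Not betterde/logger's implementation.

// Level values as defined by the constants in Monolog\Logger (Monolog 1.x/2.x).
const LEVELS = [
    'DEBUG' => 100, 'INFO' => 200, 'NOTICE' => 250, 'WARNING' => 300,
    'ERROR' => 400, 'CRITICAL' => 500, 'ALERT' => 550, 'EMERGENCY' => 600,
];

final class SketchHandler
{
    /** @var string[] messages this handler has written */
    public $received = [];
    private $level;
    private $bubble;

    public function __construct(int $level, bool $bubble)
    {
        $this->level = $level;
        $this->bubble = $bubble;
    }

    // Returns true when the record must NOT be passed to the next handler.
    public function handle(array $record): bool
    {
        if ($record['level'] < $this->level) {
            return false; // below this handler's level: let the next one try
        }
        $this->received[] = $record['message'];
        return $this->bubble === false;
    }
}

// Merge the configured extra attributes into the record, like a Monolog processor.
function with_extra(array $record, array $extra): array
{
    $record['extra'] = array_merge($record['extra'] ?? [], $extra);
    return $record;
}

function log_record(array $handlers, string $levelName, string $message, array $extra): array
{
    $record = with_extra(['level' => LEVELS[$levelName], 'message' => $message], $extra);
    foreach ($handlers as $handler) {
        if ($handler->handle($record)) {
            break; // bubble = false: propagation stops here
        }
    }
    return $record;
}

$elastic = new SketchHandler(LEVELS['INFO'], false); // 'level' => 200, 'bubble' => false
$fallback = new SketchHandler(LEVELS['DEBUG'], true);
$extra = ['host' => 'example.com', 'php' => '7.3.5', 'laravel' => '6.5.2'];

log_record([$elastic, $fallback], 'DEBUG', 'cache miss', $extra);
$last = log_record([$elastic, $fallback], 'ERROR', 'payment failed', $extra);
```

With these values, the DEBUG record (100) is below the Elasticsearch handler's level and falls through to the next handler, while the ERROR record (400) is written to Elasticsearch and, because `bubble` is false, goes no further.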

Now you can enjoy the pleasure of writing CRUD.

I hope this helps. Happy hacking…