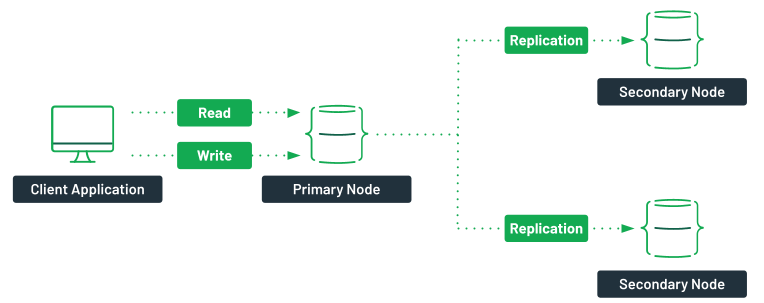

MongoDB Series: Deploying a ReplicaSet Cluster

Environment

- Multipass VMs

- mongo:latest

For an introduction to Multipass, see my other article, "A Cross-Platform Tool for Managing Ubuntu VMs" (《跨平台 Ubuntu VMs 管理工具》).

Creating the nodes

multipass launch docker --name cluster-01 --disk 40G --cpus 2 --mem 4G

multipass launch docker --name cluster-02 --disk 40G --cpus 2 --mem 4G

multipass launch docker --name cluster-03 --disk 40G --cpus 2 --mem 4G

| Name | State | IPv4 | Image |
| --- | --- | --- | --- |
| cluster-01 | Running | 10.0.8.4, 172.17.0.1 | Ubuntu 22.04 LTS |
| cluster-02 | Running | 10.0.8.5, 172.17.0.1 | Ubuntu 22.04 LTS |
| cluster-03 | Running | 10.0.8.6, 172.17.0.1 | Ubuntu 22.04 LTS |

Once the VMs are up, their IPs are 10.0.8.4, 10.0.8.5, and 10.0.8.6 (172.17.0.1 is the Docker bridge address inside each VM).

Pulling the latest MongoDB image

Pull the latest MongoDB Docker image on each node:

docker pull mongo:latest
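If you would rather not open a shell on each VM, the pull can probably be driven from the host with `multipass exec`. The sketch below is an assumption about workflow, not part of the original steps. `pull_cmd` and the `RUN` switch are hypothetical names introduced here: by default the script only prints the three commands, and it executes them only when `RUN=1` is set.

```shell
#!/bin/sh
# Sketch: pull mongo:latest on every VM from the host via `multipass exec`.
# RUN is a hypothetical switch; without RUN=1 the commands are only printed.
set -eu

NODES="cluster-01 cluster-02 cluster-03"

# Print the command that pulls the image on one node.
pull_cmd() {
    printf 'multipass exec %s -- docker pull mongo:latest\n' "$1"
}

for node in $NODES; do
    if [ "${RUN:-0}" = "1" ]; then
        # Actually run the pull inside the VM.
        multipass exec "$node" -- docker pull mongo:latest
    else
        # Dry run: show what would be executed.
        pull_cmd "$node"
    fi
done
```

Printing first makes it easy to review the commands before anything touches the VMs.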

Starting the MongoDB nodes

multipass shell cluster-01

docker run -d --name mongodb --net host mongo:latest mongod --replSet betterde --bind_ip 10.0.8.4

multipass shell cluster-02

docker run -d --name mongodb --net host mongo:latest mongod --replSet betterde --bind_ip 10.0.8.5

multipass shell cluster-03

docker run -d --name mongodb --net host mongo:latest mongod --replSet betterde --bind_ip 10.0.8.6

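`--replSet` sets the name of the ReplicaSet and `--bind_ip` sets the address mongod listens on. Run the matching command on each of the three VMs to start its mongod service.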
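The three commands differ only in the bind address, so they can be generated from a node-to-IP mapping. This is a minimal sketch: `run_cmd` is a hypothetical helper, the IPs are the ones from the VM listing above, and the script only prints the commands so you can paste each one into the right VM.

```shell
#!/bin/sh
# Sketch: generate the per-node `docker run` command from a name:ip mapping.
set -eu

REPLSET="betterde"

# Print the docker run command for a mongod bound to the given IP.
run_cmd() {
    printf 'docker run -d --name mongodb --net host mongo:latest mongod --replSet %s --bind_ip %s\n' "$REPLSET" "$1"
}

# name:ip pairs taken from the VM listing
for pair in cluster-01:10.0.8.4 cluster-02:10.0.8.5 cluster-03:10.0.8.6; do
    node=${pair%%:*}   # text before the first colon
    ip=${pair#*:}      # text after the first colon
    printf '# on %s\n' "$node"
    run_cmd "$ip"
done
```

Note that with `--bind_ip` set to the VM address only, mongod does not listen on 127.0.0.1. If local connections are wanted, `--bind_ip` also accepts a comma-separated list such as `localhost,10.0.8.4`.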

Initializing the ReplicaSet

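# Log in to the VM

multipass shell cluster-01

# Enter the MongoDB container

docker exec -it mongodb bash

# Connect with mongosh (mongod listens only on 10.0.8.4, so pass the host explicitly)

mongosh --host 10.0.8.4

# Initialize the ReplicaSet

rs.initiate()

# Add the other two nodes

rs.add("10.0.8.5:27017")

rs.add("10.0.8.6:27017")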
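Called with no argument, `rs.initiate()` lets MongoDB infer the first member's host name. An alternative, used instead of the `rs.initiate()` / `rs.add()` sequence and not in addition to it, is to pass an explicit configuration that lists all three members by IP. The sketch below assumes the addresses used in this article. `rs_config` is a hypothetical helper, and the script only prints the resulting `mongosh --eval` command rather than running it.

```shell
#!/bin/sh
# Sketch: build an explicit replica set config and print the mongosh command.
set -eu

REPLSET="betterde"
HOSTS="10.0.8.4:27017 10.0.8.5:27017 10.0.8.6:27017"

# Build the configuration document as a JavaScript object literal.
rs_config() {
    id=0
    members=""
    for host in $HOSTS; do
        # Append a member entry, comma-separated after the first one.
        members="${members}${members:+, }{ _id: ${id}, host: \"${host}\" }"
        id=$((id + 1))
    done
    printf '{ _id: "%s", members: [ %s ] }\n' "$REPLSET" "$members"
}

# Print the command; it should be run once, against a single node only.
printf "mongosh --host 10.0.8.4 --quiet --eval 'rs.initiate(%s)'\n" "$(rs_config)"
```

Listing the members up front keeps the member names predictable (the IPs rather than whatever host name the VM reports).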

If nothing went wrong, the cluster is now up. Use the following command to check its status:

rs.status();

{

set: 'betterde',

date: ISODate("2022-10-17T10:21:08.985Z"),

myState: 1,

term: Long("1"),

syncSourceHost: '',

syncSourceId: -1,

heartbeatIntervalMillis: Long("2000"),

majorityVoteCount: 2,

writeMajorityCount: 2,

votingMembersCount: 3,

writableVotingMembersCount: 3,

optimes: {

lastCommittedOpTime: { ts: Timestamp({ t: 1666002061, i: 1 }), t: Long("1") },

lastCommittedWallTime: ISODate("2022-10-17T10:21:01.056Z"),

readConcernMajorityOpTime: { ts: Timestamp({ t: 1666002061, i: 1 }), t: Long("1") },

appliedOpTime: { ts: Timestamp({ t: 1666002061, i: 1 }), t: Long("1") },

durableOpTime: { ts: Timestamp({ t: 1666002061, i: 1 }), t: Long("1") },

lastAppliedWallTime: ISODate("2022-10-17T10:21:01.056Z"),

lastDurableWallTime: ISODate("2022-10-17T10:21:01.056Z")

},

lastStableRecoveryTimestamp: Timestamp({ t: 1666002061, i: 1 }),

electionCandidateMetrics: {

lastElectionReason: 'electionTimeout',

lastElectionDate: ISODate("2022-10-17T09:47:06.626Z"),

electionTerm: Long("1"),

lastCommittedOpTimeAtElection: { ts: Timestamp({ t: 1666000026, i: 1 }), t: Long("-1") },

lastSeenOpTimeAtElection: { ts: Timestamp({ t: 1666000026, i: 1 }), t: Long("-1") },

numVotesNeeded: 1,

priorityAtElection: 1,

electionTimeoutMillis: Long("10000"),

newTermStartDate: ISODate("2022-10-17T09:47:06.643Z"),

wMajorityWriteAvailabilityDate: ISODate("2022-10-17T09:47:06.655Z")

},

members: [

{

_id: 0,

name: '10.0.8.4:27017',

health: 1,

state: 1,

stateStr: 'PRIMARY',

uptime: 2200,

optime: { ts: Timestamp({ t: 1666002061, i: 1 }), t: Long("1") },

optimeDate: ISODate("2022-10-17T10:21:01.000Z"),

lastAppliedWallTime: ISODate("2022-10-17T10:21:01.056Z"),

lastDurableWallTime: ISODate("2022-10-17T10:21:01.056Z"),

syncSourceHost: '',

syncSourceId: -1,

infoMessage: '',

electionTime: Timestamp({ t: 1666000026, i: 2 }),

electionDate: ISODate("2022-10-17T09:47:06.000Z"),

configVersion: 6,

configTerm: 1,

self: true,

lastHeartbeatMessage: ''

},

{

_id: 1,

name: '10.0.8.5:27017',

health: 1,

state: 2,

stateStr: 'SECONDARY',

uptime: 2006,

optime: { ts: Timestamp({ t: 1666002061, i: 1 }), t: Long("1") },

optimeDurable: { ts: Timestamp({ t: 1666002061, i: 1 }), t: Long("1") },

optimeDate: ISODate("2022-10-17T10:21:01.000Z"),

optimeDurableDate: ISODate("2022-10-17T10:21:01.000Z"),

lastAppliedWallTime: ISODate("2022-10-17T10:21:01.056Z"),

lastDurableWallTime: ISODate("2022-10-17T10:21:01.056Z"),

lastHeartbeat: ISODate("2022-10-17T10:21:08.915Z"),

lastHeartbeatRecv: ISODate("2022-10-17T10:21:08.916Z"),

pingMs: Long("2"),

lastHeartbeatMessage: '',

syncSourceHost: '10.0.8.4:27017',

syncSourceId: 0,

infoMessage: '',

configVersion: 6,

configTerm: 1

},

{

_id: 2,

name: '10.0.8.6:27017',

health: 1,

state: 2,

stateStr: 'SECONDARY',

uptime: 2001,

optime: { ts: Timestamp({ t: 1666002061, i: 1 }), t: Long("1") },

optimeDurable: { ts: Timestamp({ t: 1666002061, i: 1 }), t: Long("1") },

optimeDate: ISODate("2022-10-17T10:21:01.000Z"),

optimeDurableDate: ISODate("2022-10-17T10:21:01.000Z"),

lastAppliedWallTime: ISODate("2022-10-17T10:21:01.056Z"),

lastDurableWallTime: ISODate("2022-10-17T10:21:01.056Z"),

lastHeartbeat: ISODate("2022-10-17T10:21:08.915Z"),

lastHeartbeatRecv: ISODate("2022-10-17T10:21:08.912Z"),

pingMs: Long("2"),

lastHeartbeatMessage: '',

syncSourceHost: '10.0.8.4:27017',

syncSourceId: 0,

infoMessage: '',

configVersion: 6,

configTerm: 1

}

],

ok: 1,

'$clusterTime': {

clusterTime: Timestamp({ t: 1666002061, i: 1 }),

signature: {

hash: Binary(Buffer.from("0000000000000000000000000000000000000000", "hex"), 0),

keyId: Long("0")

}

},

operationTime: Timestamp({ t: 1666002061, i: 1 })

}

The `rs.status()` output shows that all three nodes are healthy: 10.0.8.4 is the PRIMARY and the other two are SECONDARY.
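The full `rs.status()` document is long, and for a quick health check only each member's name and state matter. As a rough sketch, the script below builds a replica set connection string from the three addresses and prints a `mongosh` one-liner that lists `name` and `stateStr` per member. `rs_uri` is a hypothetical helper, and the script prints the command instead of running it.

```shell
#!/bin/sh
# Sketch: build the replica set URI and print a compact status one-liner.
set -eu

REPLSET="betterde"
HOSTS="10.0.8.4:27017 10.0.8.5:27017 10.0.8.6:27017"

# Join the hosts into a replica set connection string.
rs_uri() {
    list=""
    for host in $HOSTS; do
        list="${list}${list:+,}${host}"
    done
    printf 'mongodb://%s/?replicaSet=%s\n' "$list" "$REPLSET"
}

# One-liner that prints "<name> <stateStr>" for every member.
printf 'mongosh "%s" --quiet --eval %s\n' "$(rs_uri)" \
    "'rs.status().members.forEach(m => print(m.name + \" \" + m.stateStr))'"
```

The same connection string is what a client application would use to reach the set, since it lets the driver discover whichever node is currently primary.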

I hope this is helpful. Happy hacking…